InterGen: Diffusion-based Multi-human Motion Generation under Complex Interactions

"Oppenheimer and Einstein walked side by side in the garden and had profound discussions."

"With fiery passion two dancers entwine in Latin dance sublime."

Abstract

Diffusion models have recently driven tremendous progress in generating realistic human motions. Yet, existing approaches largely disregard rich multi-human interactions.

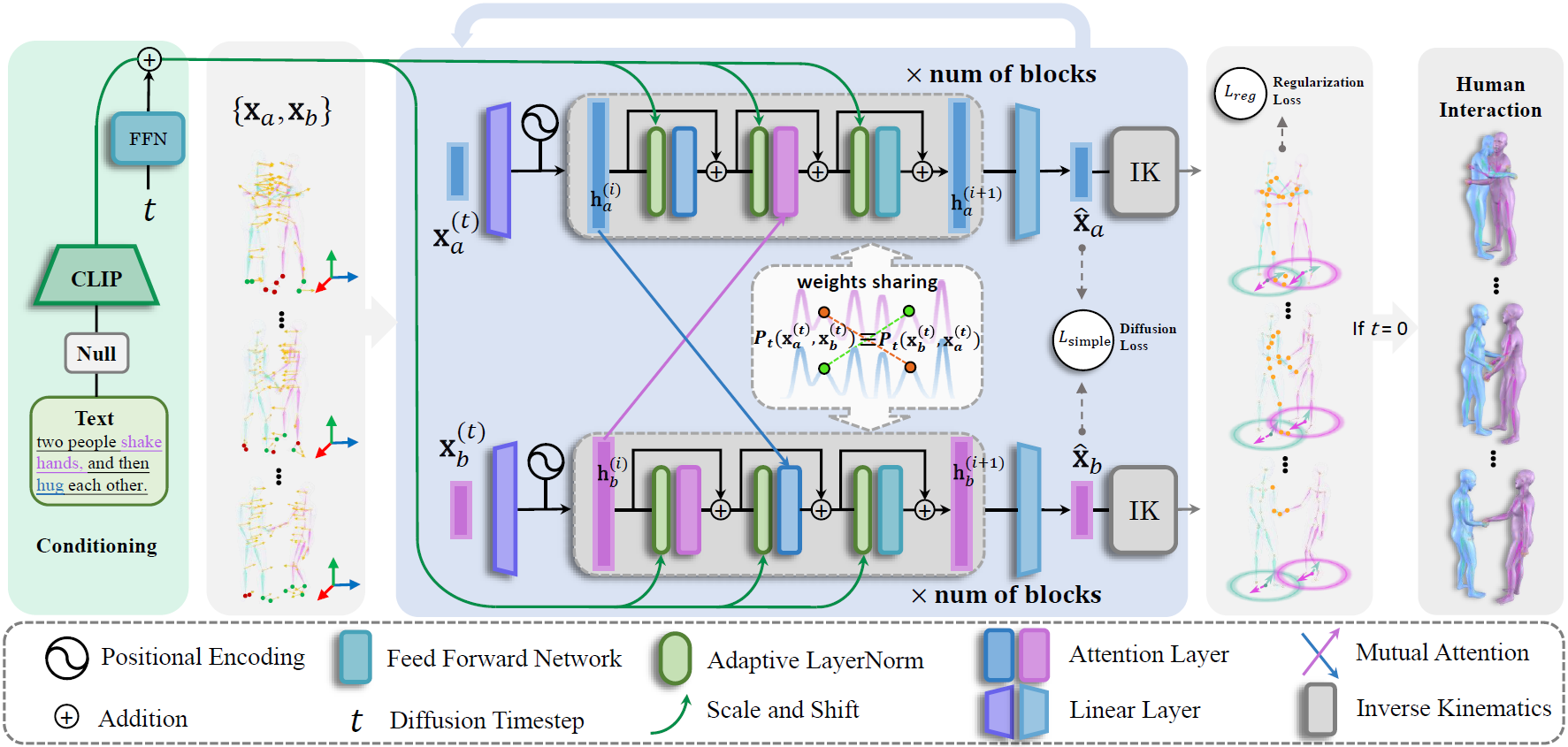

In this paper, we present InterGen, an effective diffusion-based approach that incorporates human-to-human interactions into the motion diffusion process, which enables layman users to customize high-quality two-person interaction motions, with only text guidance.

We first contribute a multimodal dataset, named InterHuman. It consists of about 107M frames of diverse two-person interactions, with accurate skeletal motions and 16,756 natural language descriptions. On the algorithm side, we carefully tailor the motion diffusion model to our two-person interaction setting. We then propose a novel representation for motion input in our interaction diffusion model, which explicitly formulates the global relations between the two performers in the world frame. We further introduce two novel regularization terms that encode spatial relations, together with a corresponding damping scheme applied during the training of our interaction diffusion model.
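
To make the idea of a spatial-relation regularizer with damping more concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not the formulation used by InterGen: the pairwise joint distance map, the hard cutoff in `damping_weight`, the `threshold` value, and all function names are hypothetical choices made for this example.

```python
import numpy as np


def joint_distance_map(a, b):
    """Pairwise distances between the joints of two people.

    a, b: arrays of shape (J, 3) holding joint positions in a shared world frame.
    Returns an array of shape (J, J) where entry (i, j) is |a[i] - b[j]|.
    """
    diff = a[:, None, :] - b[None, :, :]
    return np.linalg.norm(diff, axis=-1)


def damping_weight(t, t_max, threshold=0.7):
    """Hypothetical damping: keep the regularizer only at low noise levels.

    Assumption for this sketch: when the diffusion step t is close to t_max,
    the denoised prediction is too noisy for spatial terms to be meaningful,
    so the weight is set to zero there.
    """
    return 1.0 if t / t_max <= threshold else 0.0


def interaction_loss(pred_a, pred_b, gt_a, gt_b, t, t_max):
    """Damped mean squared error between predicted and reference distance maps."""
    pred_map = joint_distance_map(pred_a, pred_b)
    gt_map = joint_distance_map(gt_a, gt_b)
    spatial = float(np.mean((pred_map - gt_map) ** 2))
    return damping_weight(t, t_max) * spatial
```

Because the term depends only on distances between the two performers, it penalizes errors in their relative placement rather than in their absolute position; a full training objective would combine it with the usual per-person reconstruction loss.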

Extensive experiments validate the effectiveness and generalizability of InterGen. Notably, it can generate more diverse and compelling two-person motions than previous methods and enables various downstream applications for human interactions.

Prompt-guided Generation

Our approach enables layman users to customize high-quality and diverse two-person interaction motions, with only text prompt guidance.

"In an intense boxing match, one person attacks the opponent with straight punch, and then the opponent falls over."

"Two good friends jump in the same rhythm to celebrate something."

"Two people bow to each other."

"Two people embrace each other."

"In an intense boxing match, one is continuously punching while the other is defending and counterattacking."

"Two fencers engage in a thrilling duel, their sabres clashing and sparking as they strive for victory."

"The two are blaming each other and having an intense argument."

"In an intense boxing match, one man knocks the other down with a thumping leg."

InterHuman Dataset

InterHuman is a comprehensive, large-scale 3D human interactive motion dataset encompassing a diverse range of 3D motions of two interactive people, each accompanied by natural language annotations.

BibTeX

@article{liang2024intergen,
  title={Intergen: Diffusion-based multi-human motion generation under complex interactions},
  author={Liang, Han and Zhang, Wenqian and Li, Wenxuan and Yu, Jingyi and Xu, Lan},
  journal={International Journal of Computer Vision},
  pages={1--21},
  year={2024},
  publisher={Springer}
}